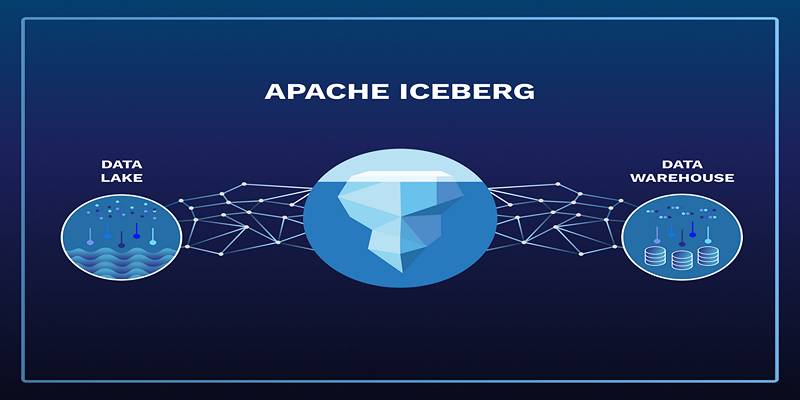

Managing large-scale datasets can be difficult, especially when performance, consistency, and scalability all matter. Apache Iceberg makes it easier by providing a table format for big data engines such as Apache Spark, Flink, Trino, and Hive. It lets data engineers and analysts query, insert, and update data without the pitfalls of traditional Hive-style tables. This post walks through how to use Apache Iceberg tables, from basic setup to common operations.

What is Apache Iceberg?

Apache Iceberg is a table format for large-scale data analytics. It organizes data in a way that allows it to be queried reliably, updated efficiently, and maintained easily—even across multiple compute engines like Apache Spark, Flink, Trino, and Hive.

Originally developed at Netflix, Iceberg helps solve the challenges of unreliable table formats in data lakes. It ensures consistent performance, easy schema updates, and safe, versioned access to massive datasets. Iceberg allows data engineers and analysts to focus on data quality and consistency without worrying about the technical challenges of managing massive data lakes.

Why Use Iceberg Tables?

Using Apache Iceberg tables in data lakes comes with a wide range of advantages:

- Reliable Querying: Data can be queried consistently across multiple engines.

- Schema Evolution: Columns can be added, renamed, or dropped as metadata-only changes, without rewriting existing data files or breaking access to historical data.

- Time Travel: Previous versions of data can be accessed for auditing or rollback.

- Partition Flexibility: Iceberg supports hidden partitioning, so users don’t need to hardcode partition filters.

- High Performance: File-level metadata and column statistics let engines skip irrelevant files during large scans, and compaction can be used to reduce the number of small files.

These features make Iceberg ideal for businesses working with petabytes of data or complex pipelines.

Key Concepts Behind Iceberg

Before implementing Iceberg, it is essential to understand the following core concepts:

Table Format

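Iceberg uses a metadata-driven structure. A table metadata file lists the table's snapshots, each snapshot points to a manifest list, and each manifest lists data files together with their partition values and column statistics. This hierarchy tells an engine exactly which data files belong to which version of the table.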

Snapshots

Every time a change is made to a table, such as inserting, deleting, or updating data, a new snapshot is created. Each snapshot describes the complete set of data files that make up the table at that point, which allows users to go back in time and see how the table looked previously.
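The idea can be illustrated with a toy model. The sketch below is not Iceberg's implementation: `ToyTable`, `Snapshot`, and the file names are hypothetical, and real snapshots reference manifests rather than holding file names directly. It only shows why keeping one immutable file set per commit makes older versions readable.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Snapshot:
    snapshot_id: int
    operation: str          # "append" or "delete" in this toy model
    data_files: frozenset   # every data file visible in this table version


@dataclass
class ToyTable:
    snapshots: list = field(default_factory=list)

    def current_files(self) -> frozenset:
        return self.snapshots[-1].data_files if self.snapshots else frozenset()

    def _commit(self, operation: str, files) -> Snapshot:
        # A commit never edits an old snapshot; it appends a new one.
        snap = Snapshot(len(self.snapshots) + 1, operation, frozenset(files))
        self.snapshots.append(snap)
        return snap

    def append(self, *new_files: str) -> Snapshot:
        return self._commit("append", self.current_files() | set(new_files))

    def delete(self, *old_files: str) -> Snapshot:
        return self._commit("delete", self.current_files() - set(old_files))

    def files_as_of(self, snapshot_id: int) -> frozenset:
        for snap in self.snapshots:
            if snap.snapshot_id == snapshot_id:
                return snap.data_files
        raise KeyError(snapshot_id)


table = ToyTable()
first = table.append("part-0001.parquet")
second = table.append("part-0002.parquet")
third = table.delete("part-0001.parquet")

print(sorted(table.current_files()))                   # latest version
print(sorted(table.files_as_of(first.snapshot_id)))    # version after the first commit
```

Because earlier snapshots are never modified, reading an old version is just a matter of picking a different entry from the snapshot list.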

Partitioning

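Unlike Hive-style tables, Iceberg supports hidden partitioning: partition values are derived from a column by a transform (for example, the day of a timestamp), and queries simply filter on the original column. This simplifies query writing and improves performance, because the engine can skip files whose partitions cannot match the filter instead of scanning the whole table.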
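A small sketch may help make hidden partitioning concrete. It assumes a day-based transform that maps a timestamp to the number of whole days since 1970-01-01 (UTC); the file names and the `prune` helper are hypothetical, and real engines prune through manifest metadata rather than a Python list.

```python
from datetime import datetime, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def utc(*args) -> datetime:
    """Convenience constructor for UTC timestamps."""
    return datetime(*args, tzinfo=timezone.utc)


def days_transform(ts: datetime) -> int:
    """Partition value: whole days between the Unix epoch and ts."""
    return (ts - EPOCH).days


def prune(data_files, start: datetime, end: datetime) -> list:
    """Keep only files whose partition day can contain rows in [start, end]."""
    lo, hi = days_transform(start), days_transform(end)
    return [name for name, day in data_files if lo <= day <= hi]


# Hypothetical data files, each tagged with the partition value of its rows.
data_files = [
    ("f1.parquet", days_transform(utc(2025, 3, 31, 23, 59))),
    ("f2.parquet", days_transform(utc(2025, 4, 1, 8, 0))),
    ("f3.parquet", days_transform(utc(2025, 4, 2, 0, 5))),
]

# The user filters on the timestamp column only; the day values stay hidden.
matching = prune(data_files, utc(2025, 4, 1), utc(2025, 4, 1, 23, 59, 59))
print(matching)
```

The query author never mentions a partition column; the filter on the timestamp is translated into a range of partition values behind the scenes.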

Setting Up Apache Iceberg

Apache Iceberg supports several engines. To use it, a user must choose the correct integration based on their environment.

Step 1: Choose a Processing Engine

Iceberg supports the following engines:

- Apache Spark

- Apache Flink

- Trino (formerly PrestoSQL)

- Apache Hive

Each engine comes with its own setup process, but they all share the same table format underneath.

Step 2: Add Required Dependencies

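For Spark users, Iceberg support can be added with the runtime package. The artifact name encodes the Spark version (3.3) and Scala version (2.12), followed by the Iceberg release (1.4.0), so pick the build that matches your cluster: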

    spark-shell \
      --packages org.apache.iceberg:iceberg-spark-runtime-3.3_2.12:1.4.0

Flink users must include the Iceberg connector JAR. Similarly, Trino and Hive users must configure their catalogs to recognize Iceberg tables.
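On Spark, the package alone is usually not enough: a catalog also has to be configured before tables can be created. The sketch below assembles a `spark-shell` command with the standard Iceberg catalog properties. The catalog name `catalog_name` and the warehouse path are placeholders, and the `hadoop` (file system) catalog type is an assumption suited to local experiments; a production setup would more likely point at a Hive, REST, or other catalog.

```python
import shlex

ICEBERG_PACKAGE = "org.apache.iceberg:iceberg-spark-runtime-3.3_2.12:1.4.0"


def iceberg_spark_conf(catalog: str, warehouse: str) -> dict:
    """Spark properties for a file-system ("hadoop") Iceberg catalog."""
    prefix = f"spark.sql.catalog.{catalog}"
    return {
        # Enables Iceberg's SQL extensions (stored procedures, row-level commands).
        "spark.sql.extensions":
            "org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions",
        # Registers the catalog under the chosen name.
        prefix: "org.apache.iceberg.spark.SparkCatalog",
        f"{prefix}.type": "hadoop",
        f"{prefix}.warehouse": warehouse,
    }


def spark_shell_command(package: str, conf: dict) -> list:
    """Build the argument list for spark-shell from a package and properties."""
    args = ["spark-shell", "--packages", package]
    for key, value in conf.items():
        args += ["--conf", f"{key}={value}"]
    return args


# Placeholder catalog name and warehouse location.
conf = iceberg_spark_conf("catalog_name", "/tmp/iceberg-warehouse")
command = spark_shell_command(ICEBERG_PACKAGE, conf)
print(shlex.join(command))
```

The same key/value pairs can be passed to `SparkSession.builder.config(key, value)` when starting Spark from PySpark instead of the shell.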

Creating Iceberg Tables

Once the environment is set up, users can begin creating Iceberg tables using SQL or code, depending on the engine in use.

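Create Iceberg Tables in Spark or Trino

SQL-Based Table Creation

Below is an example using Spark SQL syntax. Trino creates the same table with a different syntax: it uses VARCHAR instead of STRING and declares partitioning through the table property WITH (partitioning = ARRAY['day(signup_time)']) rather than USING iceberg and PARTITIONED BY.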

    CREATE TABLE catalog_name.database_name.table_name (
        user_id BIGINT,
        username STRING,
        signup_time TIMESTAMP
    )
    USING iceberg
    PARTITIONED BY (days(signup_time));

This example creates a table partitioned by the day of signup_time, so queries that filter on signup_time can skip data files belonging to other days.

Performing CRUD Operations with Iceberg

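Apache Iceberg supports insert, update, and delete operations, each committed atomically. In Spark, UPDATE and row-level DELETE rely on the Iceberg SQL extensions being enabled. The examples below omit the catalog name and assume the session's current catalog is the Iceberg catalog; otherwise, prefix the table with catalog_name as in the CREATE TABLE statement.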

Insert Data

INSERT INTO database_name.table_name VALUES (1, 'Alice', current_timestamp());

Update Data

    UPDATE database_name.table_name
    SET username = 'Alicia'
    WHERE user_id = 1;

Delete Data

DELETE FROM database_name.table_name WHERE user_id = 1;

These operations are executed as transactions and create new snapshots under the hood.

Using Time Travel in Iceberg

One of Iceberg's most powerful features is the ability to go back in time to previous versions of a table.

Query a Previous Snapshot

    SELECT * FROM database_name.table_name
    VERSION AS OF 192837465; -- snapshot ID

Or by timestamp:

    SELECT * FROM database_name.table_name
    TIMESTAMP AS OF '2025-04-01T08:00:00';

Time travel is helpful for auditing, debugging, or recovering from bad writes.
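A timestamp-based query has to be mapped to a concrete snapshot first. The sketch below shows one way to think about that lookup, assuming the rule is "the latest snapshot committed at or before the requested time". The `history` entries and snapshot IDs are invented for illustration, and the function is a model of the idea rather than the engine's actual code.

```python
from bisect import bisect_right
from datetime import datetime

# Hypothetical commit history: (commit time, snapshot id), oldest first.
history = [
    (datetime(2025, 3, 30, 12, 0), 101),
    (datetime(2025, 4, 1, 7, 30), 102),
    (datetime(2025, 4, 2, 9, 15), 103),
]


def snapshot_as_of(history, ts: datetime) -> int:
    """Return the id of the snapshot that was current at time ts."""
    commit_times = [committed_at for committed_at, _ in history]
    idx = bisect_right(commit_times, ts)   # number of commits at or before ts
    if idx == 0:
        raise ValueError(f"no snapshot exists at or before {ts}")
    return history[idx - 1][1]


print(snapshot_as_of(history, datetime(2025, 4, 1, 8, 0)))
```

A timestamp earlier than the first commit has no matching snapshot, which is why the sketch raises an error in that case instead of returning an empty table.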

Evolving Table Schema

Iceberg supports schema evolution, allowing users to update the table structure over time without affecting older data.

Add Column

ALTER TABLE database_name.table_name ADD COLUMN user_email STRING;

Drop Column

ALTER TABLE database_name.table_name DROP COLUMN user_email;

Rename Column

ALTER TABLE database_name.table_name RENAME COLUMN user_email TO email;

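These schema changes are metadata-only and are tracked in the table's metadata. In Spark, a time-travel query against an older snapshot is read with the schema that was in effect for that snapshot, but time travel itself does not undo a schema change; to reverse one, issue another ALTER TABLE. Note that the three statements above are independent examples: run in sequence, the rename would fail because user_email has already been dropped.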
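Schema evolution is cheap because Iceberg identifies each column by a unique field ID rather than by name or position. The toy model below illustrates the consequence; `ToySchema` and the row layout (a dict keyed by field ID) are hypothetical simplifications, not Iceberg's storage format.

```python
from dataclasses import dataclass, field


@dataclass
class ToySchema:
    columns: dict = field(default_factory=dict)   # field id -> current column name
    next_id: int = 1

    def add(self, name: str) -> int:
        fid = self.next_id          # ids are never reused
        self.columns[fid] = name
        self.next_id += 1
        return fid

    def _id_of(self, name: str) -> int:
        return next(fid for fid, n in self.columns.items() if n == name)

    def rename(self, old: str, new: str) -> None:
        self.columns[self._id_of(old)] = new   # data files are untouched

    def drop(self, name: str) -> None:
        del self.columns[self._id_of(name)]

    def read(self, stored_row: dict) -> dict:
        """Project a stored row ({field id: value}) onto the current schema."""
        return {name: stored_row.get(fid) for fid, name in self.columns.items()}


schema = ToySchema()
uid = schema.add("user_id")
uname = schema.add("username")
email_id = schema.add("user_email")

row = {uid: 1, uname: "Alice", email_id: "alice@example.com"}

schema.rename("user_email", "email")
print(schema.read(row))        # same stored value, now exposed as "email"

schema.drop("email")
new_email_id = schema.add("email")
print(schema.read(row))        # the new "email" column does not see old values
```

Because a re-added column receives a fresh ID, dropping a column and later adding one with the same name does not resurrect the old values.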

Managing Iceberg Tables

Managing Iceberg tables involves optimizing performance, handling metadata, and ensuring clean-up of old files. Proper maintenance helps Iceberg run efficiently at scale.

Optimization Tips

- Enable File Compaction: This helps merge small files into larger ones. By reducing the number of files, it improves the efficiency of data scans.

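- Expire Old Snapshots: Regularly remove outdated snapshots, along with the data and metadata files that only those snapshots reference, to free up storage space and keep table metadata small. Expired snapshots are no longer available for time travel, so choose the retention window with auditing needs in mind.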

- Use Metadata Tables: Iceberg provides tables like table_name.snapshots and table_name.history for monitoring and querying metadata.
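The logic behind snapshot expiration can be sketched in a few lines. In Spark this maintenance is normally run through Iceberg's expire_snapshots procedure; the Python below is only a model of the idea, with parameter names chosen to mirror that procedure's older_than and retain_last options. The snapshot tuples and file names are hypothetical.

```python
from datetime import datetime


def expire_snapshots(snapshots, older_than: datetime, retain_last: int = 1):
    """Model of snapshot expiration.

    snapshots: list of (snapshot_id, committed_at, set_of_files), oldest first.
    Returns (kept_snapshots, files_safe_to_delete).
    """
    # The most recent `retain_last` snapshots are kept regardless of age.
    protected = snapshots[max(len(snapshots) - retain_last, 0):]
    protected_ids = {sid for sid, _, _ in protected}

    kept = [s for s in snapshots if s[1] >= older_than or s[0] in protected_ids]
    kept_ids = {sid for sid, _, _ in kept}
    expired = [s for s in snapshots if s[0] not in kept_ids]

    # A file may be deleted only if no surviving snapshot still references it.
    live_files = set().union(*(files for _, _, files in kept))
    expired_files = set().union(*(files for _, _, files in expired))
    return kept, expired_files - live_files


snapshots = [
    (101, datetime(2025, 3, 1), {"a.parquet"}),
    (102, datetime(2025, 3, 15), {"a.parquet", "b.parquet"}),
    (103, datetime(2025, 4, 1), {"b.parquet", "c.parquet"}),
]

kept, deletable = expire_snapshots(snapshots, older_than=datetime(2025, 3, 20))
print([sid for sid, _, _ in kept], sorted(deletable))
```

Note that b.parquet survives even though an expired snapshot referenced it, because the current snapshot still needs it.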

Common Use Cases

Apache Iceberg is versatile and can be applied in various business scenarios:

- Data Lakehouse: Combine the flexibility of data lakes with features of data warehouses. Iceberg enables a unified data architecture that supports batch and real-time analytics.

- Machine Learning Pipelines: Maintain feature sets and experiment tracking. Iceberg helps data scientists and engineers manage large-scale datasets for ML model training.

- ETL Workflows: Build reliable, restartable data pipelines. Iceberg’s ACID transactions ensure that ETL jobs can be safely retried and monitored.

- Audit and Compliance: Access historical data instantly for reviews. Iceberg’s time travel capabilities make it easy to fulfill compliance requirements by tracking data changes.

Conclusion

Apache Iceberg offers a modern and powerful approach to managing data lakes. By supporting full SQL operations, schema evolution, and time travel, it enables teams to build reliable, scalable, and flexible data systems. Organizations looking for better performance, easier data governance, and engine interoperability will find Iceberg to be a valuable asset. With this guide, any data engineer or analyst can get started using Iceberg and take full advantage of its capabilities.