The Bag-of-Words (BoW) model is a foundational way to represent text for machine learning in Natural Language Processing (NLP). This straightforward method is widely used in document classification, spam detection, and sentiment analysis. This article explains how the model works and where it is applied in practice.

What is the Bag-of-Words Model?

The Bag-of-Words model converts text into a collection of words, ignoring grammar and word order. It is a statistical approach that describes a text only by which words are present and how often each one occurs.

Key Characteristics:

Vocabulary-Driven: Relies on a predefined list of unique words from the corpus.

Vector Representation: Each document becomes a vector of word counts.

Sparse Representation: Large datasets are stored efficiently as sparse matrices, which keep only the non-zero entries.

Step-by-Step Implementation

1. Text Preprocessing

Text preprocessing cleans the raw text to normalize the input and reduce noise.

Lowercasing: Converts "Natural Language" → "natural language".

Tokenization: Splits text into words, phrases, or symbols, e.g., "NLP-is-fun" → ["NLP", "is", "fun"].

Stop-Word Removal: Drops common words such as "the" and "and" (optional; apply it only when it benefits the task).

Stemming/Lemmatization: Reduces words to their base forms, e.g., "running" → "run".

Example:

Input: "The cat's toy is missing."

Processed: ["cat", "toy", "missing"]
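The steps above can be sketched in plain Python. This is a minimal illustration rather than a production pipeline: the `preprocess` function, the tiny `STOP_WORDS` set, and the sample sentence are assumptions made for this example, and stemming is left out because it usually relies on an external library.

```python
import re

# Tiny illustrative stop-word list (an assumption; real lists are much longer).
STOP_WORDS = {"a", "an", "and", "is", "of", "the", "to"}

def preprocess(text):
    """Lowercase, tokenize, and drop stop words."""
    text = text.lower()
    # Drop possessive endings so "cat's" becomes "cat".
    text = re.sub(r"'s\b", "", text)
    # Keep runs of letters; punctuation and hyphens act as separators.
    tokens = re.findall(r"[a-z]+", text)
    return [tok for tok in tokens if tok not in STOP_WORDS]

print(preprocess("The cat's toy is missing."))  # ['cat', 'toy', 'missing']
```

Because hyphens are treated as separators, the same function also splits "NLP-is-fun" into separate tokens before the stop-word filter removes "is".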

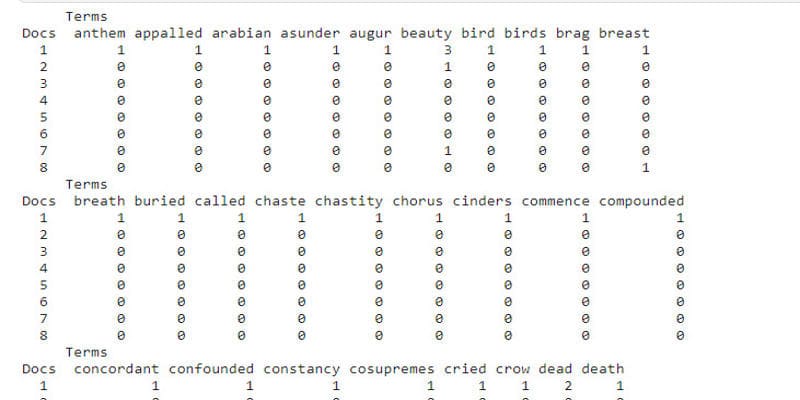

2. Vocabulary Creation

After preprocessing, build a list of the unique terms (the vocabulary) found in the corpus. For a corpus with three documents:

Doc 1: "I love NLP."

Doc 2: "NLP is powerful."

Doc 3: "I enjoy learning NLP."

Vocabulary: ["i", "love", "nlp", "is", "powerful", "enjoy", "learning"]
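As a rough sketch, the vocabulary above and the matching count vectors can be built as follows. The helper names `tokenize`, `build_vocabulary`, and `vectorize` are made up for this example, and words are kept in order of first appearance so the result matches the list above.

```python
import re

docs = ["I love NLP.", "NLP is powerful.", "I enjoy learning NLP."]

def tokenize(text):
    """Lowercase the text and split it into alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())

def build_vocabulary(documents):
    """Collect unique tokens in order of first appearance."""
    vocabulary = []
    for doc in documents:
        for token in tokenize(doc):
            if token not in vocabulary:
                vocabulary.append(token)
    return vocabulary

def vectorize(doc, vocabulary):
    """Count how often each vocabulary word occurs in the document."""
    tokens = tokenize(doc)
    return [tokens.count(word) for word in vocabulary]

vocabulary = build_vocabulary(docs)
vectors = [vectorize(doc, vocabulary) for doc in docs]

print(vocabulary)
for vector in vectors:
    print(vector)
```

Library implementations may differ in detail. For example, scikit-learn's CountVectorizer orders features alphabetically and, with its default settings, ignores single-character tokens such as "i".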

3. Sparse Matrix Optimization

Libraries like scikit-learn use sparse matrices (e.g., CSR format) to store only non-zero values, reducing memory usage.
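To make the idea concrete, here is a hand-rolled sketch of the CSR layout in plain Python. The `to_csr` helper is hypothetical and only illustrates the storage scheme; the dense rows are the count vectors of the three example documents, worked out by hand from the vocabulary above.

```python
def to_csr(rows):
    """Convert dense rows into the three CSR arrays."""
    data = []      # non-zero values, row by row
    indices = []   # column index of each stored value
    indptr = [0]   # indptr[i]:indptr[i + 1] marks the slice belonging to row i
    for row in rows:
        for col, value in enumerate(row):
            if value != 0:
                data.append(value)
                indices.append(col)
        indptr.append(len(data))
    return data, indices, indptr

dense = [
    [1, 1, 1, 0, 0, 0, 0],  # "I love NLP."
    [0, 0, 1, 1, 1, 0, 0],  # "NLP is powerful."
    [1, 0, 1, 0, 0, 1, 1],  # "I enjoy learning NLP."
]

data, indices, indptr = to_csr(dense)
print(data)     # [1, 1, 1, 1, 1, 1, 1, 1, 1, 1]
print(indices)  # [0, 1, 2, 2, 3, 4, 0, 2, 5, 6]
print(indptr)   # [0, 3, 6, 10]
```

Only 10 of the 21 cells are stored here. The saving grows quickly with vocabulary size, since each document uses only a small share of the words. SciPy's `csr_matrix` exposes arrays with the same names (`data`, `indices`, `indptr`).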

Advanced Variations of BoW

Binary BoW

This variant records only whether a word is present (1) or absent (0). It is useful when the existence of a word matters more than its frequency.
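The difference from count-based BoW shows up as soon as a word repeats. In this small sketch the token list is a hypothetical document in which "nlp" occurs twice:

```python
vocabulary = ["i", "love", "nlp", "is", "powerful", "enjoy", "learning"]

# Hypothetical preprocessed document; "nlp" appears twice.
tokens = ["nlp", "is", "powerful", "nlp"]

count_vector = [tokens.count(word) for word in vocabulary]
binary_vector = [1 if word in tokens else 0 for word in vocabulary]

print(count_vector)   # [0, 0, 2, 1, 1, 0, 0]
print(binary_vector)  # [0, 0, 1, 1, 1, 0, 0]
```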

N-Grams

Captures word sequences to retain context:

Bigrams: "natural language", "language processing".

Trigrams: "I love NLP".

Example Vocabulary with Bigrams:

["natural language", "language processing", "is fascinating"]
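A minimal way to generate n-grams from a token list is a sliding window. The `ngrams` helper and the sample sentence are assumptions made for illustration.

```python
def ngrams(tokens, n):
    """Return every run of n consecutive tokens, joined by spaces."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

tokens = "natural language processing is fascinating".split()

print(ngrams(tokens, 2))
# ['natural language', 'language processing', 'processing is', 'is fascinating']

print(ngrams("i love nlp".split(), 3))
# ['i love nlp']
```

The window produces every adjacent pair, including less informative ones such as "processing is", so n-gram vocabularies grow much faster than single-word vocabularies.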

Applications in Depth

1. Document Classification

BoW vectors can be fed to a Naive Bayes classifier, which labels an email as "spam" or "not spam" based on its word patterns.
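The sketch below shows one way this can work: a small multinomial Naive Bayes classifier with add-one (Laplace) smoothing, written with the standard library only. The four training emails are invented for illustration, and the function names are hypothetical.

```python
import math
from collections import Counter

# Invented toy training set: (tokens, label).
train = [
    (["win", "free", "prize", "now"], "spam"),
    (["free", "money", "offer"], "spam"),
    (["meeting", "schedule", "tomorrow"], "not spam"),
    (["project", "meeting", "notes"], "not spam"),
]

def train_naive_bayes(examples):
    """Count documents per class and word occurrences per class."""
    class_docs = Counter()
    word_counts = {}
    vocabulary = set()
    for tokens, label in examples:
        class_docs[label] += 1
        word_counts.setdefault(label, Counter()).update(tokens)
        vocabulary.update(tokens)
    return class_docs, word_counts, vocabulary

def predict(tokens, model):
    """Return the class with the highest log-probability."""
    class_docs, word_counts, vocabulary = model
    total_docs = sum(class_docs.values())
    best_label, best_score = None, -math.inf
    for label in class_docs:
        # Log prior: share of training documents with this label.
        score = math.log(class_docs[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for tok in tokens:
            if tok not in vocabulary:
                continue  # skip words never seen in training
            # Add-one smoothing keeps unseen class/word pairs above zero.
            score += math.log(
                (word_counts[label][tok] + 1) / (total_words + len(vocabulary))
            )
        if score > best_score:
            best_label, best_score = label, score
    return best_label

model = train_naive_bayes(train)
print(predict(["free", "prize"], model))     # spam
print(predict(["meeting", "notes"], model))  # not spam
```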

2. Sentiment Analysis

Words such as "excellent" and "terrible" in a review contribute directly to predicting positive or negative sentiment, because each word carries its own weight in the score.
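A toy version of weight-based scoring looks like this. The weights are hypothetical values chosen by hand for the example; in practice a trained model would learn them from labeled reviews.

```python
from collections import Counter

# Hypothetical per-word weights (a trained model would learn these).
WEIGHTS = {"excellent": 2.0, "good": 1.0, "bad": -1.0, "terrible": -2.0}

def sentiment_score(text):
    """Sum weight * count over the words of the text; unknown words score 0."""
    counts = Counter(text.lower().split())
    return sum(WEIGHTS.get(word, 0.0) * n for word, n in counts.items())

print(sentiment_score("excellent food and good service"))  # 3.0
print(sentiment_score("terrible service"))                 # -2.0
```

A positive total suggests positive sentiment and a negative total suggests negative sentiment.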

3. Search Engines

Early search algorithms used BoW to match query terms against document vectors.
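One simple way to match a query against documents is to compare their BoW vectors with cosine similarity. The similarity measure, the three documents, and the helper names below are assumptions for this sketch.

```python
import math
from collections import Counter

def bow(text):
    """Represent text as word counts."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two count vectors stored as Counters."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

documents = [
    "nlp is powerful",
    "i love cooking pasta",
    "learning nlp is fun",
]

def search(query, documents):
    """Return documents sharing at least one term with the query, best first."""
    q = bow(query)
    scored = [(cosine(q, bow(doc)), doc) for doc in documents]
    return [doc for score, doc in sorted(scored, reverse=True) if score > 0]

print(search("powerful nlp", documents))
# ['nlp is powerful', 'learning nlp is fun']
```

The query's word order has no effect on the ranking, as expected from a bag-of-words representation.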

4. Topic Modeling

Latent Dirichlet Allocation (LDA) takes BoW representations as input to discover topics in large text collections.

Strengths of BoW

Simplicity: Easy to implement with Python libraries such as scikit-learn's CountVectorizer.

Interpretability: Each vector dimension corresponds directly to a word that people can read and understand.

Scalability: Handles large datasets efficiently with sparse matrices.

Limitations and Solutions

1. Semantic Blindness

BoW fails to establish any connection between the words "happy" and "joyful."

Solution: Use Word2Vec or GloVe embeddings to capture semantic relationships.

2. High Dimensionality

A 10,000-word vocabulary creates 10,000-dimensional vectors.

Solution: Apply dimensionality reduction (PCA or TruncatedSVD).

3. Context Loss

The word "bank" can mean a financial institution or a riverbank, yet BoW represents both senses identically.

Solution: Use part-of-speech tagging or contextual embeddings (BERT).

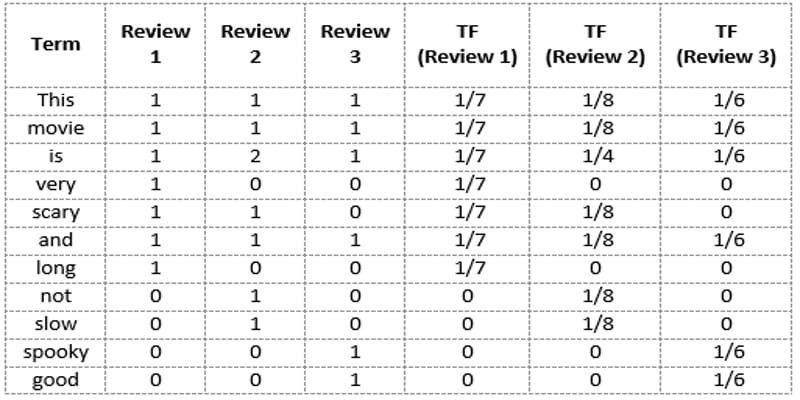

TF-IDF: A BoW Enhancement

Term Frequency-Inverse Document Frequency (TF-IDF) refines BoW by reducing the weight of words that are common across the corpus.

TF: Frequency of a word in a document.

IDF: Penalizes words common across many documents (e.g., "the").

Example:

The word "machine" appears frequently in a tech article (high TF) but rarely in other documents (high IDF), resulting in a high TF-IDF score.
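The calculation can be sketched with one common formulation, TF × log(N / DF), where N is the number of documents and DF is the number of documents that contain the term. The formula variant and the three tiny documents are assumptions for this example; libraries often use smoothed variants, so their scores will differ.

```python
import math

# Invented corpus: the first document stands in for the "tech article".
docs = [
    ["the", "machine", "learns", "machine", "patterns"],
    ["the", "weather", "is", "nice"],
    ["the", "recipe", "is", "simple"],
]

def tf(term, doc):
    """Term frequency: share of the document's tokens equal to the term."""
    return doc.count(term) / len(doc)

def idf(term, docs):
    """Inverse document frequency: log(N / number of docs containing the term)."""
    df = sum(1 for doc in docs if term in doc)
    if df == 0:
        return 0.0  # term never occurs in the corpus
    return math.log(len(docs) / df)

def tf_idf(term, doc, docs):
    return tf(term, doc) * idf(term, docs)

print(tf_idf("machine", docs[0], docs))  # high TF and high IDF
print(tf_idf("the", docs[0], docs))      # 0.0, since "the" is in every document
```

"machine" scores well above zero because it is frequent in the first document and absent elsewhere, while "the" is cancelled out by an IDF of zero.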

BoW in Modern NLP Pipelines

Although models such as BERT now dominate, BoW still plays a supporting role in NLP workflows:

Prototyping: Provides fast baseline tests before deep learning systems are deployed.

Feature Engineering: Combined with embeddings in hybrid systems.

Explainability: Simple models give clear explanations that help satisfy compliance requirements, especially in finance.

Practical Example: Spam Detection

Dataset: 5,000 emails labeled as spam or ham.

Preprocessing: Lowercase, remove stop words, tokenize.

BoW Vectorization: Create a 3,000-word vocabulary.

Model Training: Train a Logistic Regression classifier on the BoW vectors.

Evaluation: Achieves 95% accuracy on test data.
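The pipeline can be illustrated end to end on a toy scale. The sketch below is not the experiment described above: the four emails are invented stand-ins for the real dataset, the logistic regression is a bare-bones stochastic gradient descent written for this example, and the learning rate and epoch count are arbitrary choices.

```python
import math

# Invented toy data standing in for the email dataset (1 = spam, 0 = ham).
emails = [
    ("win free prize now", 1),
    ("free money offer", 1),
    ("meeting schedule tomorrow", 0),
    ("project meeting notes", 0),
]

def tokenize(text):
    return text.lower().split()

# Vocabulary and word-to-column lookup.
vocabulary = sorted({tok for text, _ in emails for tok in tokenize(text)})
index = {word: i for i, word in enumerate(vocabulary)}

def vectorize(text):
    """Turn text into a count vector; out-of-vocabulary words are ignored."""
    vec = [0] * len(vocabulary)
    for tok in tokenize(text):
        if tok in index:
            vec[index[tok]] += 1
    return vec

def sigmoid(z):
    return 1 / (1 + math.exp(-z))

def train(X, y, lr=0.5, epochs=200):
    """Fit logistic regression with per-example gradient descent."""
    weights, bias = [0.0] * len(X[0]), 0.0
    for _ in range(epochs):
        for xi, yi in zip(X, y):
            z = sum(w * x for w, x in zip(weights, xi)) + bias
            error = sigmoid(z) - yi
            weights = [w - lr * error * x for w, x in zip(weights, xi)]
            bias -= lr * error
    return weights, bias

def predict(text, weights, bias):
    z = sum(w * x for w, x in zip(weights, vectorize(text))) + bias
    return 1 if sigmoid(z) >= 0.5 else 0

X = [vectorize(text) for text, _ in emails]
y = [label for _, label in emails]
weights, bias = train(X, y)

print(predict("free prize", weights, bias))     # 1 (spam)
print(predict("meeting notes", weights, bias))  # 0 (ham)
```

Each learned weight belongs to one vocabulary word, so the model can be inspected directly: spam-related words end up with positive weights and ham-related words with negative ones.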

Future of BoW

- Hybrid Models: BoW + Transformers for real-time sentiment analysis.

- Edge Computing: Lightweight BoW models that run on IoT devices.

- Multilingual Support: BoW with subword tokenization (Byte-Pair Encoding).

FAQs

1. What is the main purpose of the Bag-of-Words model?

The BoW model converts unstructured text into numerical vectors so that machine learning models can analyze it quickly. In industry it often serves as the first processing stage for tasks such as spam detection and sentiment analysis.

2. Does Bag-of-Words consider word order?

No. The BoW model discards all information about grammar, syntax, and word order because it treats text as an unordered collection of words. The sentences "The dog bit the man" and "The man bit the dog" produce identical BoW representations.
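This is easy to check with word counts. The snippet below uses Python's `collections.Counter` as a stand-in for a BoW vector:

```python
from collections import Counter

bow_a = Counter("the dog bit the man".split())
bow_b = Counter("the man bit the dog".split())

print(bow_a)           # Counter({'the': 2, 'dog': 1, 'bit': 1, 'man': 1})
print(bow_a == bow_b)  # True
```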

3. How does BoW handle synonyms such as "happy" and "joyful"?

BoW does not recognize synonyms. It treats every word as an independent feature and ignores semantic relationships. Embedding techniques such as Word2Vec and GloVe address this by representing words as vectors in which similar words lie close together.

4. Why is the high dimensionality of BoW a challenge?

The vector dimension equals the vocabulary size, so a corpus with 10,000 unique words produces 10,000-dimensional vectors. Because each document uses only a small fraction of those words, the vectors are sparse and consist mostly of zeros.

5. How does TF-IDF differ from BoW?

BoW uses raw word counts, whereas TF-IDF weights each count by how rare the word is across the corpus, so common words such as "the" carry less weight.

Conclusion

The Bag-of-Words model remains a fundamental approach in NLP because of its simplicity and speed. Although modern methods often outperform it, BoW still serves applications ranging from spam filters to academic research thanks to its interpretability and low cost. Combined with TF-IDF and N-Grams, it keeps its advantages while offsetting some of its drawbacks, which makes it a lasting tool in NLP.